Audio captchas

Distinguishing man and machine on the Internet

On many websites users are prompted to prove that they are human by entering symbols that are difficult to read. In case of partially sighted people, audio captchas are used, but there’s room for improvement.

Anyone who surfs on the Internet will sooner or later encounter captchas. The purpose of those small boxes with barely legible letters and symbols is to distinguish human Internet users from machines – the latter of which may clutter our email inboxes with spam.

Distorted language instead of barely legible letters

For sighted people, entering them is a nuisance. The symbols are often so hard to read that several time-wasting attempts are required. For partially sighted people, on the other hand, they are a real problem. The solution is audio captchas. They consist of synthetically generated and more or less distorted sequence of words, letters, or digits that the user has to transcribe using the keyboard.

Sighted users often don’t even notice audio captchas on the Internet. Not every website has one, and where they are provided, they are often hidden behind a small button, which does not immediately catch the eye. Audio captchas frequently don’t work very well; human users have difficulties in solving them, whereas computers often show superior performance on the task.

Machines must not understand the captchas

Prof Dr Dorothea Kolossa and her PhD student Hendrik Meutzner from the research group Cognitive Signal Processing at the Institute of Communication Acoustics are investigating the development of secure and usable audio captchas. There’s no need to worry when creepily distorted sounds with strong echo emerge from Meutzner’s office. The 32-year-old academic is spending a lot of time listening to audio captchas. For untrained listeners, they are often very difficult to understand.

“The challenge is to make the signals so difficult that they will constitute a stumbling block for machines, and at the same time so easy that humans will be able to solve the task without any problems,” says Dorothea Kolossa.

The machines in question are automatic speech recognition systems. Such systems are familiar from satnav devices or mobile phones that react to voice commands. “The characteristics of sound and the speech patterns in commonly used audio captcha are often very similar and repeating. That makes it especially easy for attackers to create suitable models for them and to train their recognition systems accordingly,” explains Meutzner.

In order to improve audio captchas, he and Dorothea Kolossa systematically exploit the differences between humans and automatic speech recognition systems. This also includes studying neurophysiological principles. The researchers want to understand how the human brain processes incoming speech signals and in what respect it is one step ahead of technology.

How humans process acoustic signals

“Understanding, for example, how humans separate two or more simultaneous acoustic signals would shed light on the issue,” says Dorothea Kolossa. If they are presented via both ears, humans are able to distinguish up to five simultaneous signals. Experts refer to this effect as auditory streaming.

It is facilitated by, among other things, the time difference between the sounds reaching each ear. Moreover, the skull between the ears absorbs the signals to some extent so that the volume is different at each ear most of the time. In order to better understand auditory streaming, Dorothea Kolossa entered a collaboration with the University of California, Berkeley. In their joint work, the researchers are analysing the neuronal signals from the inner ear to the auditory cortex, where actual speech recognition may be said to take place.

Novel audio captchas

For the development of new audio captchas, Hendrik Meutzner exploits this advantage of humans. One of his captchas presents a sequence of numbers to the listener, with two of them always partially overlapping. In addition, an echo makes it more difficult to understand what is being said.

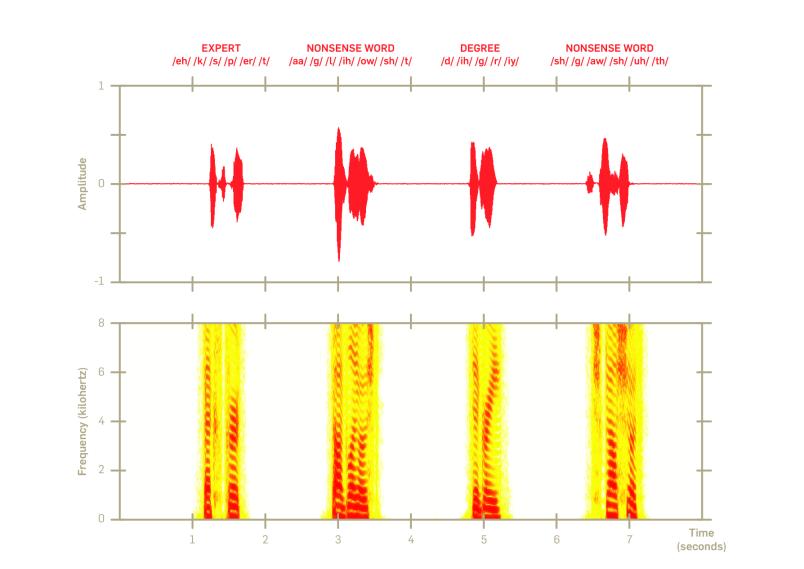

Another of his captchas makes use of human speech comprehension. The captcha presents a sequence of words to the listener, some of which make sense, while the rest is gibberish. A human will be able to spot and recognise the meaningful words. The machine will find the task difficult, because the meaningful words and the gibberish sounds exhibit very similar characteristics in the time-frequency domain.

[soundcloud:1]

For this captcha, the human success rate was measured to be 60 percent, as opposed to 14 percent in machines. In contrast, for an audio captcha that is currently deployed by a large online search engine, the success rate for humans only reaches 24 percent. Achieving a success rate of 63 percent, machines can easily outperform human listeners for this specific type of captcha. This is yet another insight that Meutzner gained in his tests.

In order to test how easy it is for humans to solve his captchas, Hendrik Meutzner uses two different methods: first, he invites test participants to the in-house audiometry lab at the institute and asks them to solve captchas. For evaluating a large number of captchas, he runs his tests on a dedicated crowdsourcing platform.

This approach is gaining more and more popularity in academia.

Hendrik Meutzner

“This approach is gaining more and more popularity in academia, as lab-based experiments require a lot of time and effort to find participants on location and conduct the tests with them. The crowdsourcing platform enables us to recruit a large number of test persons with comparative ease,” Meutzner points out. Compromises with regard to the quality of the test results are sometimes necessary. “In the lab, test participants often concentrate better, and the overall conditions, such as acoustics and technical equipment, are better and more controlled. But the combination of both methods is ideal for our purposes.”