Electrical Engineering

Comprehensible speech despite noisy surroundings

Hearing aids are meant to transmit speech intelligibly – even in loud environments. Bochum-based researchers have gained new insights to support the development of better devices.

“Yes, I can hear you,” shouts many a caller into their mobile, while a road sweeper driving past at the other end of the line interferes with the conversation. This is because the microphone transfers not only relevant sounds, i.e. speech, but also other ambient noises. Hearing aids follow the same principle. A hearing aid in a packed restaurant transfers not only the conversation partner’s words, but also the voices of other patrons and the clatter of dishes.

Specific algorithms in hearing aids are meant to render speech more intelligible by filtering out ambient noise – a considerable challenge for researchers and industrial developers, because the quality of the transferred speech must not suffer when background noise is filtered out.

Facilitating the development process of hearing aids

Prof Dr Dorothea Kolossa and Mahdie Karbasi from the RUB research group Cognitive Signal Processing have been studying the prediction of speech intelligibility as part of the project “Improved Communication through Applied Hearing Research” (I can hear). Their findings might help facilitate the development process of hearing aids. “Once we understand how well or how poorly people understand spoken words in different situations, we will be able to optimise hearing aids,” says Kolossa.

In the first stage, new algorithms must undergo a time-consuming series of tests before they can be deployed in hearing aids. How well does the algorithm filter out background noises, and is the quality of speech preserved? Regardless of whether the problem is a room where sound reverberates or loud background noise – the algorithms are supposed to ensure that future hearing aids will transfer speech in a consistently comprehensible manner.

The Bochum-based researchers’ findings may help reduce the time-consuming algorithm tests. Kolossa and Karbasi have developed an algorithm that estimates how well a person can understand speech, depending on his or her perception threshold. This technology thus supplies a lot of information that would otherwise have to be established in time-consuming hearing tests. “It would be unrealistic to assume that we can forgo hearing tests altogether in future. However, the product development phase could be shortened,” says Kolossa. The researchers aim to keep the difference between prediction and hearing test results as small as possible. The smaller the difference, the better the prediction and, consequently, the relevant algorithm.
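The idea of judging a predictor by its distance from hearing test results can be sketched in a few lines. The example below is only an illustration: the root-mean-square error is one common way to summarise such a difference, not necessarily the measure used in the study, and all names and numbers are hypothetical.

```python
import math


def rmse(predicted, measured):
    """Root-mean-square difference between predicted and measured scores."""
    if len(predicted) != len(measured) or not predicted:
        raise ValueError("need two non-empty lists of equal length")
    squared = [(p - m) ** 2 for p, m in zip(predicted, measured)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical data: fraction of words understood in four noise conditions.
measured = [0.95, 0.80, 0.55, 0.20]      # from a (made-up) hearing test
predictor_a = [0.90, 0.75, 0.60, 0.25]   # made-up predictions, method A
predictor_b = [0.99, 0.95, 0.85, 0.60]   # made-up predictions, method B

error_a = rmse(predictor_a, measured)
error_b = rmse(predictor_b, measured)

# The method with the smaller error tracks the listeners more closely.
better = "A" if error_a < error_b else "B"
print(f"RMSE A = {error_a:.3f}, RMSE B = {error_b:.3f}, better: {better}")
```

In this made-up case, method A would be preferred because its predictions lie closer to what the listeners actually understood.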

Participants evaluated audio data

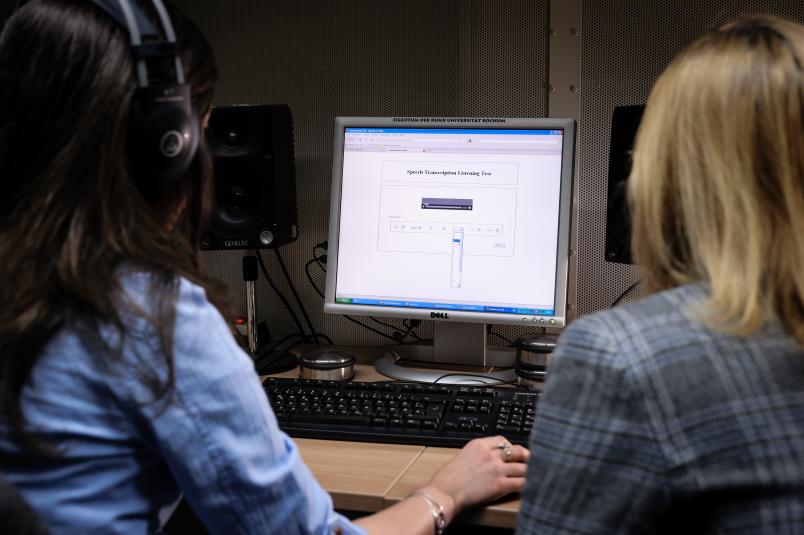

To date, the RUB researchers have been conducting tests with people with normal hearing ability in order to predict speech intelligibility. To this end, they asked 849 participants to evaluate audio data via an online platform.

Everyone listened to approximately 50 sentences with simple structures. In a drop-down menu, the participants selected which words they understood in which position in the sentence. The sentences that were played to them contained interfering noises in varying degrees or no background noises at all. With the aid of their algorithm, the researchers predicted the percentage of the sentence that the participants would understand. Subsequently, they compared this automatically estimated value with the results compiled in the tests. Their algorithm provided a more realistic estimation of speech intelligibility than a standard method.
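The scoring step described above, in which the words a participant selected are compared position by position with the words that were played, might look roughly like this. It is a minimal sketch; the function name, the case-insensitive comparison, and the example sentence are assumptions made for illustration, not details from the study.

```python
def fraction_understood(played, selected):
    """Share of word positions in which the selected word matches the played word."""
    if len(played) != len(selected) or not played:
        raise ValueError("need two non-empty word lists of equal length")
    correct = sum(
        1 for p, s in zip(played, selected) if p.lower() == s.lower()
    )
    return correct / len(played)


# Hypothetical five-word sentence and one participant's drop-down choices.
played = ["Peter", "buys", "three", "red", "flowers"]
selected = ["Peter", "buys", "nine", "red", "flowers"]

score = fraction_understood(played, selected)
print(f"{score:.0%} of the words were understood")
```

Averaging such per-sentence scores over listeners would give the measured intelligibility that an automatic estimate can be compared against.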

Typically, researchers test speech intelligibility using the STOI method (short-time objective intelligibility measure) or other so-called reference-based methods. Unlike the approach developed by Kolossa and Karbasi, these methods require a clean original signal, i.e. an audio track that has been recorded without any background noise.

In real life, however, conversations rarely take place without any ambient noise. People are almost always surrounded by sounds of some sort: the hissing of radiators, raindrops beating against the windowpane, or engine noises.

The standard method has another snag: it assesses speech intelligibility based on the difference between the original sound and the filtered sound. Let’s assume the text of the original file were read by speech software such as Siri. Considering today’s technological standards, the high and clear computer voice would probably be very intelligible. If, however, one compared it to the original file recorded in a lower-pitched, masculine voice, the track spoken by the speech software would be assessed as less intelligible according to the STOI method. The reason would be the difference in pitch rather than any difference in intelligibility.
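A toy example can make this pitfall concrete. The sketch below is not STOI; it uses a plain correlation between a reference and a test signal as a stand-in for any score that is computed relative to a reference, and it uses pure tones instead of speech. All signals and parameters are assumptions chosen for illustration.

```python
import math
import random

SAMPLE_RATE = 8000  # samples per second (assumed)
N = SAMPLE_RATE     # one second of signal


def tone(freq_hz):
    """A pure tone standing in for a voice with a given pitch."""
    return [math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE) for n in range(N)]


def pearson(x, y):
    """Pearson correlation, used here as a toy reference-based score."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(0)  # fixed seed so the run is repeatable

reference = tone(120)                                       # low-pitched "original"
noisy_same_pitch = [s + rng.gauss(0, 0.3) for s in reference]
clean_other_pitch = tone(220)                               # clean, but higher pitch

score_noisy = pearson(reference, noisy_same_pitch)
score_clean = pearson(reference, clean_other_pitch)
print(f"noisy, same pitch:  {score_noisy:.2f}")
print(f"clean, other pitch: {score_clean:.2f}")
```

The noisy copy of the reference scores far higher than the perfectly clean tone with a different pitch, which mirrors the argument above: a score that depends on similarity to a reference can penalise a signal for differing from that reference rather than for being hard to understand.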

This is why the researchers from Bochum decided to forgo an original file in their study. “The unique aspect of our test was that we only used the distorted file and did not compare it with a clean audio file,” explains Kolossa. This approach resulted in a more accurate prediction than the STOI method. Another benefit: “Our model also has the option to factor in the hearing impairment directly in the intelligibility prediction in follow-up tests.”

In order to study this area of application, Karbasi is testing the method in a project involving people with hearing impairments. In the best-case scenario, the study will provide methods for optimising hearing aids so that they automatically recognise the wearer’s current surroundings and situation. If he or she steps from a quiet street into a pub, the hearing aid should automatically register the increase in background noise. Accordingly, it would filter out the ambient noise, ideally without impairing the quality of the speech signal. The researchers aim to develop algorithms that can assess and optimise speech intelligibility in accordance with the individual perception threshold or type of hearing impairment.

Less clatter of dishes, no noisy conversation at the next table. The hearing aid would become an intelligent tool that renders speech more comprehensible to people with hearing impairments in every situation.