Blind people’s radar: Follow the sound

The ability to experience the world like a bat and find your way around without visual cues would be a great help to visually impaired people. Engineers are trying to make it happen.

A faint sound, like a gently plucked string, provides the only point of reference in the room. My eyes closed, my ears covered by headphones, I push a button to manoeuvre around a labyrinthine digital hallway. At short intervals, I hear a sound in front of me. Pushing the button, I move one step towards it. Now, the sound is a bit more to the left. I turn a fraction towards it until it’s once again in the centre in front of me. Another step. It remains in the centre. Another step.

Thus, the sound guides me safely through the hallway. I don’t hit anything, don’t walk into any walls. I get more and more confident and walk more quickly. Too quickly, it seems – a sound alerts me. I stop, regain orientation. The sound is in the centre in front of me again, and I walk on.

Intuitive use

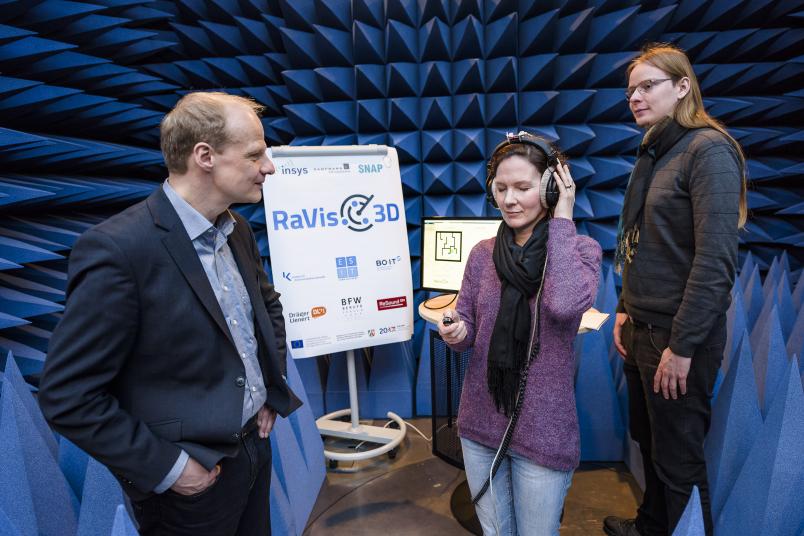

I’m surprised how intuitive the system is, seeing as assistant professor Dr Gerald Enzner from the Institute of Communication Acoustics deliberately kept the briefing short. Together with Christoph Urbanietz, he set up the so-called sonification simulator in an anechoic chamber in the basement of the ID building. “We like to use this space, because it blocks out ambient acoustics, thus enabling us to focus on the leading sound during the current preliminary development phase,” he explains.

That sound, the gently plucked string, was selected by Christoph Urbanietz – an intuitive choice that proved successful, according to his tongue-in-cheek explanation. It was a lucky find in two respects. First, it is short and easy to localise. Second, it is pleasant and inviting rather than irritating, which makes it easy to turn towards and follow. The alert sound, by contrast, is dissonant and repellent.

A lot of technology still has to be worked out, refined and reduced in size to create the sound signal that is supposed to guide blind people through unfamiliar terrain one day.

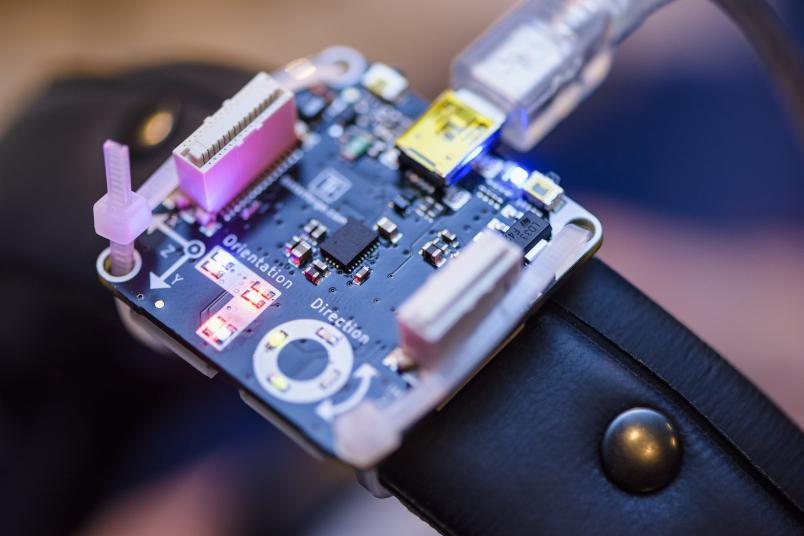

The researchers are planning to achieve that in the course of the project Ravis-3D, which has been running for more than six months to date. The sonification simulator translates a virtual layout into acoustic signals. It receives feedback on the user’s movements via a motion sensor in the headphones and a pointing device whose button is pushed to indicate each imaginary step. Later, the wearer of the system will move around in a real room, and sensors will be deployed to record his or her movements.
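The guidance principle described here can be illustrated with a minimal sketch. It is not the Ravis-3D implementation: it assumes a flat 2D layout, a known next waypoint, and plain constant-power stereo panning in place of whatever spatial audio rendering the simulator actually uses. The function names `relative_bearing` and `stereo_gains` are hypothetical.

```python
import math

def relative_bearing(pos, heading_deg, target):
    """Angle of the target relative to the facing direction, in degrees.

    Assumed convention: heading 0 points along +y and angles grow clockwise,
    so a negative result means the target is to the listener's left.
    The result lies in [-180, 180).
    """
    dx = target[0] - pos[0]
    dy = target[1] - pos[1]
    absolute = math.degrees(math.atan2(dx, dy))
    return (absolute - heading_deg + 180.0) % 360.0 - 180.0

def stereo_gains(bearing_deg):
    """Constant-power pan: returns (left_gain, right_gain).

    Bearings beyond +/-90 degrees are clamped, so a sound behind the
    listener is simply played fully on the nearer side (a simplification).
    """
    clamped = max(-90.0, min(90.0, bearing_deg))
    theta = (clamped / 90.0 + 1.0) * math.pi / 4.0  # 0 .. pi/2
    return math.cos(theta), math.sin(theta)

# Listener at the origin facing "north", waypoint ahead and to the left.
bearing = relative_bearing((0.0, 0.0), 0.0, (-1.0, 1.0))
left, right = stereo_gains(bearing)
```

In this sketch the left channel is louder whenever the waypoint lies to the left, and both channels are equal once the listener has turned to face it, which mirrors the "turn until the sound is in the centre" behaviour described above.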

System to be reduced

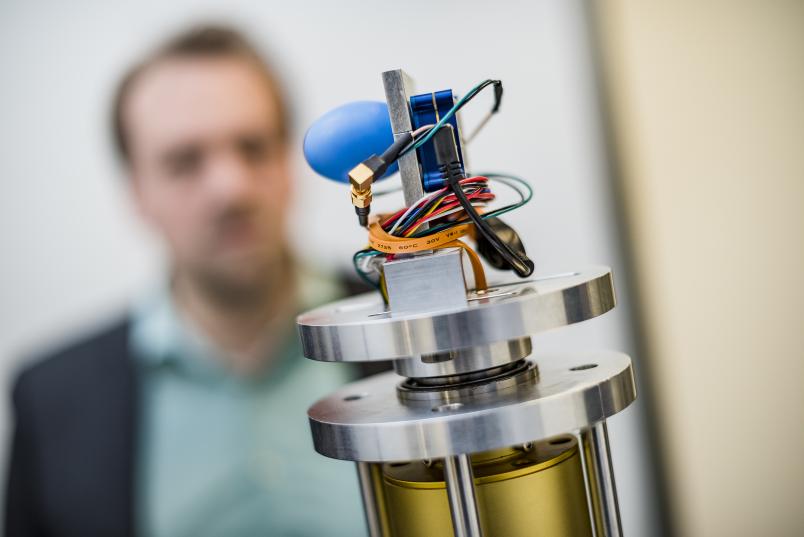

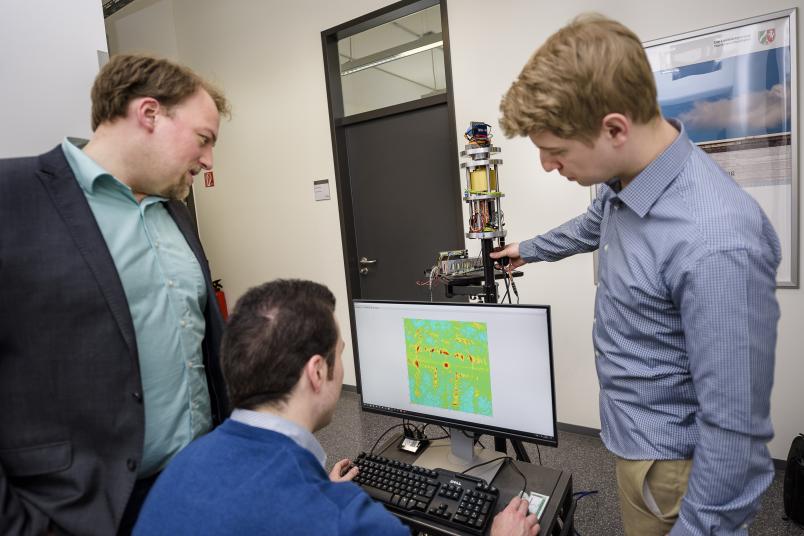

The virtual layout that is used to generate the sound is based on a radar scan of the environment. I watched the principle being applied in a hallway of the ID building. Here, the team headed by Prof Dr Nils Pohl from the Chair for Integrated Systems has set up a head-high tripod, on top of which a complicated instrument is continuously pivoting and emitting soft twittering noises. As Nils Pohl explains, most of the components of the device are only there to turn the upper part, and it is their electric motors that generate the sound. “The interesting element is the radar sensor on top. Integrated in a microchip, it is a mere two millimetres across,” says Pohl.

The little blue egg is a radar antenna; behind it in a blue box is the sensor. It was developed by the engineers from Bochum, who have grand plans for it. After reducing it in size, they intend to use it to capture the field of view needed for orientation without having to pivot the antenna. Whether that will require an angle of 30 degrees, 60 degrees or some other value remains to be answered. One thing is certain: the entire system has to be small enough to be integrated in a headband, glasses or the knob of a mobility cane.

The radar measures our environment, which is then displayed on the screen of a computer connected to the system. Red and yellow patterns appear on a predominantly greenish background. They represent objects that reflect the electromagnetic waves emitted by the radar, which are subsequently recorded by the sensor. “This blotch here, that’s me,” points out Nils Pohl and takes a step to the side. The moment the radar system pivots again, the image refreshes and his dot shifts on screen. Walls are rendered as irregular rows of shapeless patches. Thicker blotches are, for example, door frames. “Because of its excellent reflecting properties, metal is represented more strongly,” says Nils Pohl.
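How a pivoting scan might end up as coloured blotches on a screen can be sketched roughly as follows. This is an illustration only, not the Bochum team’s software: the input format (angle, distance, reflection strength between 0 and 1), the grid size, the cell size and the colour thresholds are all assumptions, as is the idea that red marks the strongest reflections.

```python
import math

def scan_to_grid(echoes, size=21, cell_m=0.5):
    """Place radar echoes on a square top-down grid centred on the sensor.

    echoes: iterable of (angle_deg, range_m, strength), with strength in 0..1
    (a hypothetical format). Each cell keeps the strongest reflection seen.
    """
    grid = [[0.0] * size for _ in range(size)]
    centre = size // 2
    for angle_deg, range_m, strength in echoes:
        a = math.radians(angle_deg)
        col = centre + round(range_m * math.sin(a) / cell_m)  # right of sensor
        row = centre - round(range_m * math.cos(a) / cell_m)  # ahead of sensor
        if 0 <= row < size and 0 <= col < size:
            grid[row][col] = max(grid[row][col], strength)
    return grid

def colour(strength):
    """Map reflection strength to a display colour (thresholds are made up)."""
    if strength >= 0.7:
        return "red"
    if strength >= 0.3:
        return "yellow"
    return "green"

# A strong reflector 2 m straight ahead and a weaker one 1 m to the right.
grid = scan_to_grid([(0.0, 2.0, 0.9), (90.0, 1.0, 0.4)])
```

Under these assumptions, a metal door frame would simply produce higher strength values than a plaster wall and therefore show up in a "hotter" colour, while empty cells stay green.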

Different application planned originally

The researchers originally had other applications in mind for their radar system. They have, for example, developed level control systems for industrial tanks and components for self-driving cars. In the process, a member of the department, Timo Jaeschke, had the idea of deploying the technology as an orientation aid for visually impaired people.

Even now, at the very beginning of the three-year project, the idea is fascinating – not just to me, but also to the many representatives of the media who have visited the researchers. “And yet it will take another two years before we have something to show for it,” says Nils Pohl.

The team still has a lot of work to do. Many questions remain open – not just with respect to technology. The simulation, for example, works like a navigation system. My sound can guide me only because it knows where I want to go. But what happens if a visually impaired person gets off the bus somewhere and doesn’t know where to go? “We’re working on it,” concludes Gerald Enzner.