Communication networks

Bypassing the traffic jams on the information superhighway

In order to travel from A to B in Berlin, you don’t need a local taxi driver. Your cab could just as well be driven from Brazil – provided that the data packets are delivered reliably and, above all, quickly.

A taxi driver navigating his cab through the city. A surgeon performing a procedure at the operating table. The crew in the control tower monitoring and directing air traffic. We instinctively place each of these pairs in close physical proximity to each other. “But these close spatial relationships are in the process of dissolving,” points out Professor Steffen Bondorf. The computer scientist heads the Chair of Distributed and Networked Systems in the Faculty of Computer Science at Ruhr University Bochum. “Calling a hotline makes us think the person is somewhere close to us, but that’s not necessarily the case nowadays. Such call centers are quite often located on a different continent.”

What’s more, thanks to the internet, it’s possible to devise, and even implement, new ways of removing the need for physical proximity where it still exists. Why should control tower operators be tied to the airport? They could control and direct air traffic remotely via monitors, just as they do on site. The taxi could be navigated through Berlin from Rio de Janeiro. The doctor could control her surgical instruments from anywhere, saving patients a stressful and time-consuming trip to a specialist clinic.

When the image freezes due to a lagging data stream

This scenario might make some people feel a little uneasy. After all, anyone who streams movies or holds video conferences online will be familiar with the picture suddenly freezing. Annoying as that may be, it doesn’t actually put anyone’s life at risk. But a surgical procedure, a taxi ride or air traffic control can be a matter of life and death, so maximum reliability and speed are essential. A delay in the transmission of the control signals could have fatal consequences.

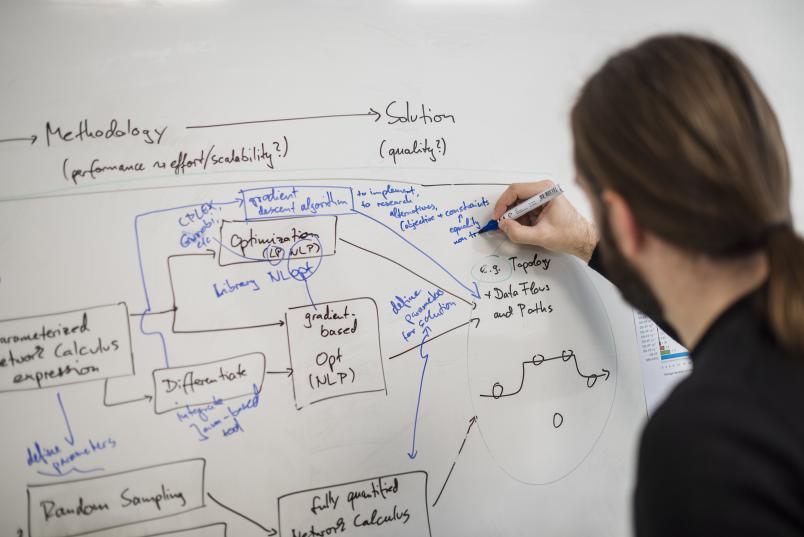

This is precisely the focus of Steffen Bondorf’s research: he is trying to provide mathematical proof of how fast and how reliably data is transmitted. It is a rather complex matter: “The internet is a huge system with countless components,” points out the researcher. “We simply can’t tackle it as a whole, but have to study its individual elements.”

The more traffic, the longer the queue

When a user submits a search query to Google from their smartphone, for example, the smartphone first sends the data to the nearest cell tower. From there, it is transmitted via a fibre-optic cable to an internet service provider’s hub, which relays it to a Google server that eventually returns a response. Since data can be replicated and Google has servers on every continent, the distances involved are relatively short. Control signals, by contrast, may have to cross oceans as intercontinental traffic via submarine cables. Each section of the route and each node at which the data is relayed affects how quickly it progresses. The same rules apply as on the highway: the more traffic there is, the longer you have to wait and the slower your progress will be.
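To make the highway analogy concrete, a packet’s end-to-end delay can be thought of as the sum of what it spends on each hop. The following Python sketch assumes a simplified model in which every hop contributes a propagation delay (distance divided by the signal speed in fibre, roughly 200 km per millisecond), a transmission delay (packet size divided by link rate) and a queueing delay. The `Hop` class, the hop names and all figures are hypothetical illustrations, not measurements.

```python
from __future__ import annotations

from dataclasses import dataclass

# Signal speed in optical fibre: roughly two thirds of the speed of light,
# i.e. about 200,000 km/s or 200 km per millisecond.
FIBRE_KM_PER_MS = 200.0


@dataclass
class Hop:
    name: str
    distance_km: float  # length of the link leading into this node
    rate_mbps: float    # link rate in megabits per second
    queue_ms: float     # assumed waiting time in this node's queue


def hop_delay_ms(hop: Hop, packet_bytes: int) -> float:
    """Delay contributed by one hop: propagation + transmission + queueing."""
    propagation = hop.distance_km / FIBRE_KM_PER_MS
    # 1 Mbit/s = 1,000 bits per millisecond
    transmission = (packet_bytes * 8) / (hop.rate_mbps * 1000.0)
    return propagation + transmission + hop.queue_ms


def path_delay_ms(path: list[Hop], packet_bytes: int) -> float:
    """End-to-end delay is the sum of the per-hop delays."""
    return sum(hop_delay_ms(hop, packet_bytes) for hop in path)


# Hypothetical Rio de Janeiro -> Berlin path; every link is treated as fibre
# for simplicity, and all figures are illustrative.
path = [
    Hop("cell tower", distance_km=2, rate_mbps=100, queue_ms=1.0),
    Hop("ISP hub", distance_km=30, rate_mbps=1_000, queue_ms=0.5),
    Hop("submarine cable", distance_km=10_000, rate_mbps=10_000, queue_ms=2.0),
    Hop("Berlin ISP", distance_km=50, rate_mbps=1_000, queue_ms=0.5),
]
print(f"{path_delay_ms(path, packet_bytes=1500):.1f} ms")  # 54.6 ms
```

In this toy model only `queue_ms` depends on how much other traffic is present; it is exactly this term that grows when the “highway” is congested, and it is the hardest one to pin down with certainty.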

“How the individual components behave is determined, on the one hand, by technical parameters. But they are by no means the only factor,” elaborates Steffen Bondorf. “On the other hand, standards are also defined in cooperation between researchers, hardware manufacturers and representatives of political bodies.” This includes, for example, ways of dealing with congestion on the information superhighway. “If too many data packets arrive at an internet node at the same time, they can’t all be processed immediately and will end up in a queue,” explains Steffen Bondorf. Long waiting times there can cause our video to freeze. Standards specify which data may be prioritised to avoid delays. “It’s like when all cars have to wait at a red light, while the fire engine is allowed to go through,” illustrates Bondorf. Such prioritisation of data can lead to conflicts: large corporations are keen to have their own services and customers prioritised and to buy priority for their data, whereas net neutrality advocates call for a level playing field for all data. “Be that as it may, it is possible to prioritise data,” says Steffen Bondorf. And that is relevant to his research.
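The red-light analogy corresponds to strict-priority scheduling, one common scheme; the article does not say which scheme the standards prescribe. The minimal Python sketch below models a hypothetical node that forwards one packet per time slot and compares first-come-first-served queueing with strict priority. The function name, the slot-based timing and the convention that a lower number means “more urgent” are all assumptions made for illustration.

```python
import heapq
from itertools import count


def simulate(arrivals, prioritise):
    """Forward one packet per time slot from a single queue.

    arrivals: list of (arrival_slot, priority, name); a lower priority value
    means more urgent (a convention chosen for this sketch).
    prioritise=False serves packets in arrival order (FIFO);
    prioritise=True always serves the most urgent waiting packet first.
    Returns a dict mapping each packet name to the slots it spent waiting.
    """
    pending = sorted(arrivals, key=lambda a: a[0])  # stable: keeps list order on ties
    queue = []     # heap entries: (key, arrival sequence, arrival_slot, name)
    seq = count()  # tie-breaker that preserves FIFO order within a key
    waits = {}
    t, i = 0, 0
    while i < len(pending) or queue:
        # Admit every packet that has arrived by slot t.
        while i < len(pending) and pending[i][0] <= t:
            slot, prio, name = pending[i]
            key = prio if prioritise else 0
            heapq.heappush(queue, (key, next(seq), slot, name))
            i += 1
        # Transmit one packet in this slot, if any is waiting.
        if queue:
            _, _, slot, name = heapq.heappop(queue)
            waits[name] = t - slot
        t += 1
    return waits


# Ten video packets and one control packet arrive at the same moment.
arrivals = [(0, 5, f"video-{k}") for k in range(10)] + [(0, 0, "control")]
print(simulate(arrivals, prioritise=False)["control"])  # 10: waits behind all video
print(simulate(arrivals, prioritise=True)["control"])   # 0: the "fire engine" goes first
```

Prioritisation is not free: with it, the last video packet waits 10 slots instead of 9. This is a small-scale version of the trade-off behind the conflict described above.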

700 milliseconds and that’s it

He uses a mathematical framework known as network calculus to model the path of data travelling from A to B, for example from Rio de Janeiro, where the taxi driver is, to Berlin, where the taxi is. All stations and interfaces that the data passes through are incorporated into the model, including abstractions of their technical specifications. “One reason this works is that the organisation of the internet is strictly hierarchical,” explains the researcher. “Data traversing this hierarchical structure tends to take the shortest route to the next higher node and back again.”
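As an illustration of what such a model can look like, here is a standard textbook case from network calculus; the article does not say which curves Bondorf’s models actually use. Traffic is described by a token-bucket arrival curve with burst $b$ and sustained rate $r$, and each node $i$ on the path by a rate-latency service curve that guarantees rate $R_i$ after an initial latency $T_i$. Chaining the nodes from A to B yields a single service curve for the whole path and a closed-form worst-case delay:

```latex
\begin{aligned}
\alpha(t) &= b + r\,t, \quad t > 0 && \text{(token-bucket arrival curve)}\\
\beta_i(t) &= R_i \max(0,\, t - T_i) && \text{(rate-latency service curve of node } i\text{)}\\
\beta_{\text{path}}(t) &= \Big(\min_i R_i\Big) \max\Big(0,\, t - \sum_i T_i\Big) && \text{(nodes concatenated from A to B)}\\
D_{\max} &= \sum_i T_i + \frac{b}{\min_i R_i}, \quad \text{if } r \le \min_i R_i && \text{(worst-case delay bound)}
\end{aligned}
```

Because the burst $b$ is divided by the path’s bottleneck rate only once, the bound grows with the sum of the node latencies rather than with one burst term per node.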

For each modelled case, a maximum transmission time from A to B is specified that must not be exceeded. In the taxi example, a data packet from Rio to Berlin must not take more than 700 milliseconds. To perform the calculation, the researcher assumes error-free conditions. The analysis then produces a single value: an upper bound on the delay that any data packet can experience on its way. “In our example, if it’s less than 700 milliseconds, the link is proven to be fast enough,” says Bondorf. “If the value is higher, say 750 milliseconds, we can still work out what adjustments we need to make to get below the pre-defined bound. That’s where data prioritisation again comes into play.” Eight priority levels are available to the devices used on the internet. It is possible to calculate which priority level the data packets should have in order to stay under the time limit, but this calculation is very time-consuming.
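The bound check and the search over priority levels can be illustrated with a deliberately simplified Python sketch. It assumes that all flows share a single bottleneck node with a strict rate-latency service curve, that this node uses preemptive strict priority, and that the rest of the path adds a fixed latency; it applies the standard blind-multiplexing leftover-service result from network calculus textbooks. The flows, figures and function names are hypothetical and are not taken from Bondorf’s tool.

```python
from itertools import product

# Units: bits and milliseconds, so rates are in bits per millisecond.
LEVELS = 8  # priority levels, as in the text; here 0 is the most urgent (assumption)


def rate_latency_delay(burst, rate, R, T):
    """Delay bound T + burst/R for a token-bucket flow at a rate-latency server."""
    if R <= 0 or rate > R:
        return float("inf")  # the server cannot keep up: no finite bound
    return T + burst / R


def leftover_service(R, T, interfering):
    """Rate-latency curve (R', T') left for one flow after the node serves the
    interfering token-bucket flows first (blind multiplexing)."""
    b_sum = sum(b for b, _ in interfering)
    r_sum = sum(r for _, r in interfering)
    if r_sum >= R:
        return 0.0, float("inf")
    R_left = R - r_sum
    return R_left, T + (b_sum + r_sum * T) / R_left


def bound_for(i, prios, flows, R, T, path_latency):
    """Delay bound of flow i: fixed latency of the rest of the path plus the
    bound at the shared node, where flows of equal or higher urgency interfere."""
    b, r, _ = flows[i]
    interfering = [(fb, fr) for j, (fb, fr, _) in enumerate(flows)
                   if j != i and prios[j] <= prios[i]]
    R_left, T_left = leftover_service(R, T, interfering)
    return path_latency + rate_latency_delay(b, r, R_left, T_left)


def find_priorities(flows, R, T, path_latency):
    """Exhaustive search over all LEVELS ** len(flows) assignments."""
    for prios in product(range(LEVELS), repeat=len(flows)):
        if all(bound_for(i, prios, flows, R, T, path_latency) <= flows[i][2]
               for i in range(len(flows))):
            return prios
    return None  # no assignment meets every deadline


# Hypothetical scenario: (burst in bits, rate in bits/ms, deadline in ms)
FLOWS = [
    (12_000, 100, 700),         # taxi control signals, 700 ms limit
    (6_000_000, 5_000, 2_000),  # bursty video stream
    (1_000_000, 3_000, 5_000),  # bulk transfer
]
R, T = 10_000, 1.0   # shared node: 10 Mbit/s after 1 ms latency
PATH_LATENCY = 60.0  # assumed fixed delay of the rest of the path

print(bound_for(0, (0, 0, 0), FLOWS, R, T, PATH_LATENCY))  # 3571.0: too slow
print(find_priorities(FLOWS, R, T, PATH_LATENCY))          # (0, 1, 0)
```

With all flows at the same level, the control signals’ bound of 3,571 ms misses the 700 ms limit; placing the video stream at a lower priority brings every flow under its deadline. The search simply returns the first feasible assignment in lexicographic order. Even this toy visits up to 8ⁿ assignments for n flows (512 here), which hints at one reason such calculations become expensive on real networks; the article does not say how Bondorf’s tool performs the search.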

Steffen Bondorf makes his analysis tool available under a permissive open-source licence. “I do assume that enterprises that operate networks or develop components will utilise the results of my research,” he says. However, it is usually difficult to obtain information from industry, as companies protect their intellectual property through secrecy. Even when it comes to implementing standards, one has to trust that companies follow the specification in every detail, because they don’t disclose their source code. “As far as I’m concerned, the question of what’s theoretically possible is the core motivation behind my research efforts,” he concludes, reflecting on his academic interest. “I want to understand the systems.”