Research data management

“The biggest challenge is the mindset”

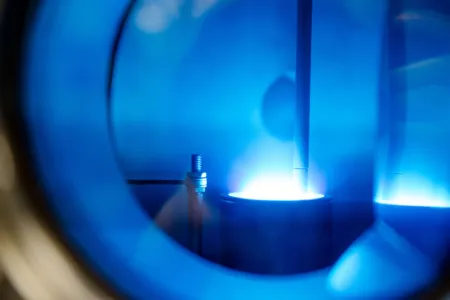

Research data management – the term has quite a technical ring to it. And yet a social component also plays an essential role, as plasma researcher Achim von Keudell explains.

At first glance, storing data in a structured and well-documented way doesn’t look like much of a challenge. But if you examine this task in detail, you will find that there are a number of hurdles to overcome. The team at Collaborative Research Centre (CRC) 1316 “Transient Atmospheric Pressure Plasmas” has tackled the problem. CRC spokesperson Professor Achim von Keudell tells us in an interview why it’s been a painstaking process.

Professor von Keudell, why is research data management a challenge in your field?

Fifty per cent of research in physics is done in large-scale research projects, for example in astrophysics or nuclear physics, where standards for data storage are in place. The rest takes place in small labs, where it's common practice for each group to use its own programmes for data evaluation and the creation of graphics, and its own tools for data storage. In addition, the data generated by different experiments are very heterogeneous. The biggest challenge, however, is the mindset.

Why?

First of all, many people don't understand the added value of research data management. They are worried that others could steal their data if it's handled with too much transparency. I've campaigned a lot on this issue at conferences of the German Physical Society – and I was surprised how hard it is to convince people and how much resistance we have to overcome. It's a bit like telling a teenager to "tidy your room" and getting the answer "Why? I know where everything is."

Younger researchers tend to be open to research data management. Others often don't see the benefits at first, because they've long managed without a standardised form of data storage and documentation.


Today, however, research funding organisations and scientific journals are demanding transparency.

Yes, over the past year or two, the question of whether data have to be made accessible has disappeared. The German Research Foundation demands research data management, and many journals won't publish your paper unless you publish your data in a repository at the same time. And yet, we are still sitting on a dormant treasure that needs to be unearthed.

How so?

It's not just about publishing the data in the traditional way; we have to publish them so that the next generation of researchers can continue to work with them. That would be sustainable, especially considering that these data were produced with taxpayers' money.

At our Collaborative Research Centre, for example, we've developed standards for metadata together with our colleagues. When I perform a measurement, it's not just the results that are of interest: for another researcher to reproduce the data later, it may also be relevant to know what the temperature was in the lab and which measuring device was used to record the results. For various experiments, we've discussed which metadata need to be documented, and we now use a common repository in the CRC to store the data according to uniform standards.

Was it an easy thing to do?

We were in the fortunate position of being able to draw on solid groundwork laid by our colleagues at the Leibniz Institute INP in Greifswald, who had already given a lot of thought to the problem and recommended a specific piece of open-source software. Still, as I mentioned before, the data that accumulate in different experiments vary greatly. It's not easy to mould them into a common format that everyone is happy with. But we now have a system used by roughly 50 people from different fields of plasma research, ranging from technical plasmas to biotechnology, and we are continuously refining it.


And where does the plasma community as a whole stand on this issue?

We know today that the way to convince people is to present individual examples that have proved successful. If we only discuss the issue at the level of the FAIR principles, it remains too abstract. Concrete use cases help to convince people. And we've been seeing a change in the mindset!


Many colleagues have started to see the benefits. If we store data in a well-documented and accessible way, researchers can access a wide range of measurements and carry out cross-cutting analyses. This hasn't been widely practised so far, but it allows for completely new insights. It has remarkable potential.

What do you tell researchers who remain sceptical?

That it's better to get on board right from the start than to realise ten years later that it was inevitable anyway and that standards you don't agree with have been established. If you get involved early, you have the chance to help define them!