Electrical Engineering: 3D mapping of rooms using radar

One day, this technology might help find victims of natural disasters or fires.

Engineers from the University Alliance Ruhr have developed novel signal processing methods for radar-based imaging and material characterisation. Their long-term objective is to combine these techniques with radar-based localisation of objects. Their vision is a flying platform capable of generating a three-dimensional representation of its surroundings. The technology might, for example, help firefighters find out what lies behind clouds of smoke in a burning building.

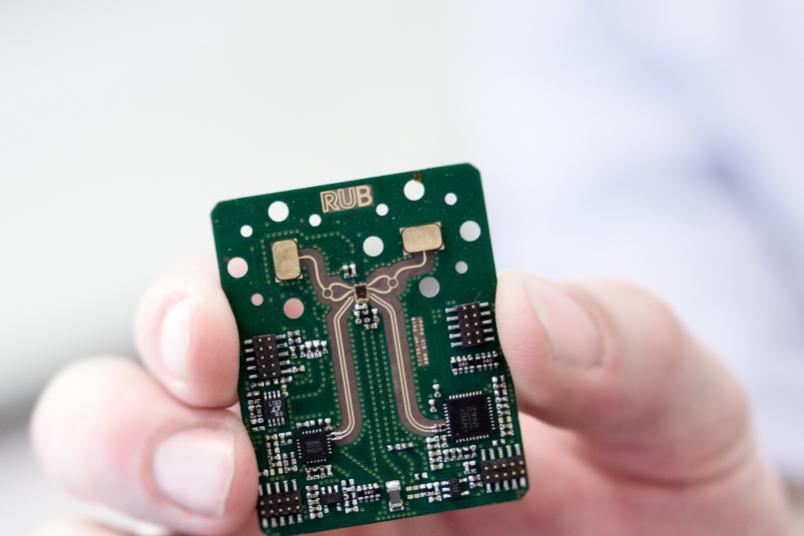

To this end, researchers from Ruhr-Universität Bochum (RUB) and the University of Duisburg-Essen (UDE) have joined forces with other institutions under the umbrella of the collaborative research centre/transregio 196 “Marie”, short for “Mobile Material Characterisation and Localisation by Electromagnetic Sensing”. A report has been published in Rubin, Bochum’s science magazine.

One measurement technique, a wealth of information

In principle, the same measurement technique should be suitable for material characterisation as well as localisation. However, it is not yet being used for both simultaneously. The measurement principle is as follows: a radar emits electromagnetic waves that are reflected by objects. Broadly speaking, the distance to an object can be calculated from the delay between the transmitted and the returning signal.
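The relationship between delay and distance can be illustrated with a minimal sketch. It assumes that the wave travels at the vacuum speed of light and that the delay is the full round trip to the object and back; the function name `range_from_delay` is hypothetical and not taken from the project.

```python
# Speed of light in vacuum (m/s); propagation in air is assumed to be close to this.
SPEED_OF_LIGHT = 299_792_458.0


def range_from_delay(round_trip_delay_s: float) -> float:
    """Distance to a reflector (m) from the round-trip delay of its echo (s)."""
    if round_trip_delay_s < 0:
        raise ValueError("delay must be non-negative")
    # The wave travels to the object and back, hence the division by two.
    return SPEED_OF_LIGHT * round_trip_delay_s / 2.0


if __name__ == "__main__":
    # An echo arriving 20 nanoseconds after transmission: about 3 metres away.
    print(f"{range_from_delay(20e-9):.3f} m")  # prints "2.998 m"
```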

The returning waves carry further information. The strength of the reflected signal is determined by the size of the object, by its shape, and by its material properties. A material parameter, the so-called relative permittivity, describes a material’s response to an electromagnetic field. By calculating the relative permittivity, researchers can thus figure out what kind of material the object is made of.
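How reflection strength relates to relative permittivity can be sketched for the simplest textbook case: a wave in air hitting a flat, thick, lossless, non-magnetic material at a right angle. Under these assumptions the Fresnel reflection coefficient depends only on the relative permittivity and can be inverted. This is an idealised illustration, not the researchers' method; as noted above, size and shape also affect a real measurement, and the function names are hypothetical.

```python
import math


def reflection_coefficient(eps_r: float) -> float:
    """Fresnel reflection coefficient at normal incidence, air to dielectric.

    Assumes a lossless, non-magnetic half-space with relative permittivity eps_r.
    """
    if eps_r < 1.0:
        raise ValueError("relative permittivity is assumed to be >= 1")
    n = math.sqrt(eps_r)  # refractive index of the material
    return (1.0 - n) / (1.0 + n)


def permittivity_from_reflection(gamma: float) -> float:
    """Invert the formula above: relative permittivity from the coefficient."""
    if not -1.0 < gamma <= 0.0:
        raise ValueError("expected a coefficient in the interval (-1, 0]")
    return ((1.0 - gamma) / (1.0 + gamma)) ** 2


if __name__ == "__main__":
    gamma = reflection_coefficient(4.0)  # a material with eps_r = 4
    print(round(gamma, 4), round(permittivity_from_reflection(gamma), 4))
```

In this idealised case a material with a relative permittivity of 4 reflects one third of the incident field amplitude, with inverted sign.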

Real-time analysis possible

Converting a radar signal into an informative image is computationally expensive. The recorded data can be compared to those of a camera that lacks a lens for focusing. Focusing is subsequently carried out on the computer. Dr Jan Barowski developed algorithms for this process during his PhD research at the Institute of Microwave Systems in Bochum, headed by Prof Dr Ilona Rolfes. “When I first started out, such corrections used to take us ten hours,” the engineer says. Today, the analysis is performed in real time on a laptop graphics card. Not only do Barowski’s algorithms take care of focusing, but they also eliminate systematic measurement errors from the data.
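What focusing on the computer can mean is illustrated below with a generic delay-and-sum backprojection, a standard textbook technique and not necessarily the algorithm developed in Bochum. The sketch assumes an idealised setup: antennas on a line, a single point-like reflector, noise-free echoes, and arbitrarily chosen frequencies and geometry. All names are hypothetical.

```python
import cmath
import math

C = 299_792_458.0  # speed of light (m/s)


def simulate_echoes(antennas_x, freqs, target):
    """Ideal echoes of one point target for every antenna position and frequency."""
    tx, ty = target
    data = []
    for ax in antennas_x:
        r = math.hypot(tx - ax, ty)  # the antenna sits at (ax, 0)
        # Round-trip phase delay: 2*pi*f * (2*r/C)
        data.append([cmath.exp(-1j * 4 * math.pi * f * r / C) for f in freqs])
    return data


def backproject(data, antennas_x, freqs, xs, ys):
    """Delay-and-sum image: undo the phase expected for each pixel, then add up."""
    image = []
    for y in ys:
        row = []
        for x in xs:
            acc = 0j
            for ax, echoes in zip(antennas_x, data):
                r = math.hypot(x - ax, y)
                for f, s in zip(freqs, echoes):
                    acc += s * cmath.exp(1j * 4 * math.pi * f * r / C)
            row.append(abs(acc))  # contributions add coherently only at a reflector
        image.append(row)
    return image


def brightest_pixel(image, xs, ys):
    """Coordinates of the pixel with the largest magnitude."""
    best = max(
        ((iy, ix) for iy in range(len(ys)) for ix in range(len(xs))),
        key=lambda p: image[p[0]][p[1]],
    )
    return xs[best[1]], ys[best[0]]


def demo():
    antennas_x = [-0.25 + 0.05 * i for i in range(11)]  # 0.5 m aperture
    freqs = [4e9 + 0.25e9 * k for k in range(17)]       # 4 GHz to 8 GHz
    xs = [-0.2 + 0.05 * i for i in range(9)]            # image grid (m)
    ys = [0.3 + 0.05 * i for i in range(9)]
    target = (xs[6], ys[3])                             # reflector on a grid point
    data = simulate_echoes(antennas_x, freqs, target)
    image = backproject(data, antennas_x, freqs, xs, ys)
    return brightest_pixel(image, xs, ys), target


if __name__ == "__main__":
    peak, target = demo()
    print("brightest pixel:", peak, "true target:", target)
```

The nested loops show why the computational cost is high: every pixel requires a sum over all antenna positions and frequencies. Each pixel is computed independently of the others, which is the kind of workload that graphics cards handle well.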

Under controlled lab conditions, the current system is able to determine the position of an object and to detect that it is made of a different material from, for example, the surface on which it lies. In the next step, the engineers intend to enable the system to recognise what the object actually is. They can already determine the relative permittivity of synthetic materials, even though they cannot yet distinguish between different synthetics. The project partners intend to gradually optimise the system for application under realistic conditions.