IT Security

How Common Are Fake Profile Pictures on Twitter?

Automated social media profiles are a powerful tool for spreading propaganda. They often feature AI-generated profile pictures. Researchers are currently looking into ways of identifying such images.

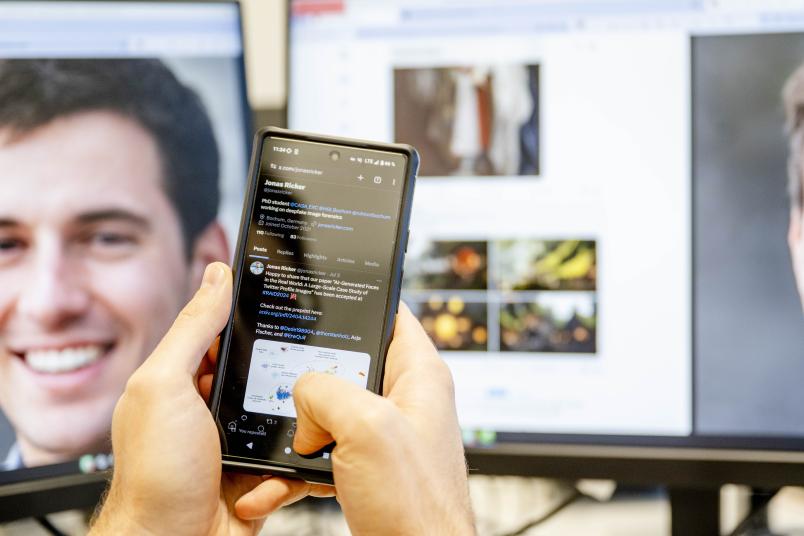

Researchers from Bochum, together with colleagues from Cologne and Saarbrücken, investigated how many profile pictures on the social network Twitter (since renamed X) were generated using artificial intelligence (AI). They analyzed almost 15 million accounts and found that 0.052 percent of them used an AI-generated picture of a person as an avatar. “That may not sound like much, but such images feature prominently on Twitter,” says lead author Jonas Ricker from Ruhr University Bochum, Germany. “Our analyses also indicate that many of the accounts are fake profiles that spread, for example, political propaganda and conspiracy theories.” The team presented their findings on October 1, 2024 in Padua at the 27th International Symposium on Research in Attacks, Intrusions and Defenses (RAID).

For their study, the researchers from Ruhr University Bochum teamed up with colleagues from the CISPA Helmholtz Center for Information Security in Saarbrücken and the GESIS – Leibniz-Institute for the Social Sciences in Cologne. The research was funded as part of the Cluster of Excellence “Cybersecurity in the Age of Large-Scale Adversaries” (CASA).

Thousands of accounts with fake profile pictures

“The current iteration of AI allows us to create deceptively real-looking images that can be leveraged on social media to create accounts that appear to be real,” explains Jonas Ricker. To date, little research has been carried out into how widespread such AI-generated profile pictures are. The researchers trained an AI model to distinguish between real and AI-generated images. This model was used to perform an automated analysis of the profile pictures of around 15 million Twitter accounts, drawing on data collected in March 2023. After the researchers excluded all accounts that didn’t have a portrait image as an avatar, approximately 43 percent of all accounts remained in the analysis. The model classified 7,723 of these as AI-generated. In addition, the researchers manually checked random samples to verify the results.
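The figures quoted above can be cross-checked with a little arithmetic. The following is a minimal sketch, not the researchers' own calculation: the exact number of accounts is not given in this article, so `TOTAL_ACCOUNTS` is an assumed round value for "almost 15 million", and the share among portrait accounts is only a rough estimate derived from the "approximately 43 percent" figure.

```python
def share_percent(part: int, whole: int) -> float:
    """Return `part` as a percentage of `whole`."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


# Assumption: the article says "almost 15 million", so this is an upper bound.
TOTAL_ACCOUNTS = 15_000_000
# From the article: about 43 percent of accounts had a portrait avatar.
PORTRAIT_SHARE = 0.43
# From the article: accounts the model classified as AI-generated.
FLAGGED = 7_723

# Rough estimate of how many accounts remained after filtering.
portrait_accounts = round(TOTAL_ACCOUNTS * PORTRAIT_SHARE)

overall = share_percent(FLAGGED, TOTAL_ACCOUNTS)
among_portraits = share_percent(FLAGGED, portrait_accounts)

print(f"Share of all accounts:      {overall:.3f} %")
print(f"Share of portrait accounts: {among_portraits:.3f} %")
```

With the rounded-up total, the overall share comes out slightly below the reported 0.052 percent, which is what one would expect if the true number of accounts is a little under 15 million. Among accounts with a portrait avatar, the estimated share is roughly twice as high.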

In the next step, the team examined how accounts with AI-generated images behaved on the platform compared to accounts with pictures of real people. Fake picture accounts had fewer followers on average and followed fewer accounts in return. “We also noticed that more than half of the accounts with fake images were first created in 2023; in some cases, hundreds of accounts were set up in a matter of hours – a clear indication that they weren’t real users,” concludes Jonas Ricker. Nine months after the initial data collection, the researchers checked whether the fake picture accounts and an equal number of real picture accounts were still active and found that over half of the fake picture accounts had been blocked by Twitter at this point. “That’s yet another indication that these are accounts that have acted in bad faith,” says Ricker.
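The observation that hundreds of accounts were created within hours suggests a simple check that could be run on account creation timestamps. The sketch below is purely illustrative and is not the method used in the study: the function name `max_creations_in_window`, the window length, and the sample data are all hypothetical. It uses a sliding window over sorted timestamps to find the largest number of accounts created within any time span of a given length.

```python
from datetime import datetime, timedelta


def max_creations_in_window(created_at: list[datetime], window: timedelta) -> int:
    """Largest number of accounts created within any span of length `window`."""
    times = sorted(created_at)
    best = 0
    start = 0  # index of the oldest timestamp still inside the window
    for end, current in enumerate(times):
        # Move the left edge forward until the span fits into the window.
        while current - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


# Hypothetical sample: five accounts created a minute apart, two created days later.
base = datetime(2023, 1, 1, 12, 0)
sample = [base + timedelta(minutes=i) for i in range(5)]
sample += [base + timedelta(days=3), base + timedelta(days=10)]

burst_size = max_creations_in_window(sample, timedelta(hours=1))
print(f"Largest burst within one hour: {burst_size} accounts")
```

In practice, a suitable window length and the burst size that should count as suspicious would have to be chosen from the data; the values here are placeholders.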

Disinformation and political propaganda

The researchers also analyzed the content that the fake image accounts spread on Twitter. Some recurring themes emerged, such as politics (often with a reference to Trump), Covid-19 and vaccinations, the war in Ukraine, lotteries, and finance, including cryptocurrencies. “We can only speculate what’s going on there,” says Jonas Ricker. “But it’s fair to assume that some accounts were created to spread targeted disinformation and political propaganda.”

Going forward, the team plans to continue working on the automated detection of fake images, including those generated by more recent AI models. In the current study, the researchers limited themselves to the “StyleGAN 2” model, which is what the website thispersondoesnotexist.com is based on. This website can be used to generate fake images of people with just one click. “We assume that this site is often used to produce AI-generated profile pictures,” says Ricker. “This is because such AI-generated images are more difficult to trace than when someone uses a real image of a stranger as their avatar.”